AI advancements raise questions

Researchers consider ethical issues as they work on a variety of projects

The Three Laws

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

— From the “Handbook of Robotics, 56th Edition, 2058 A.D.” in Isaac Asimov’s “I, Robot”

Published in 1950, Isaac Asimov’s classic science-fiction story collection, “I, Robot,” anticipated today’s debate about the future of artificial intelligence, a field involving machines that can learn.

“AI” has become a buzzword as more devices are programmed to assist people and handle repetitive tasks. Sales of virtual digital assistants like Amazon’s Alexa, for example, are expected to reach $15.8 billion by 2021.

At the same time, some observers worry about workers being replaced by machines. Self-driving vehicles, for example, eventually are expected to eliminate the need for cab and truck drivers.

Virginia’s universities are involved in a number of research projects aimed at unlocking the potential of artificial intelligence.

Researchers there say it’s already a wild ride, with advances coming faster than they could have imagined a few years ago. Ethical questions are arriving quickly, too.

Milos Manic, a Virginia Commonwealth University computer scientist, raised questions about the future of mankind’s relationship with artificial intelligence at the 2018 International Conference on e-Society, e-Learning and e-Technologies (ICSLT 2018) in London earlier this year.

“How do you teach a computer to feel, love, or dream, or forget?” Manic asked.

“Also, as [we enable] machines to think and learn, how do we ensure morality and ethics in their future decisions? How do we replicate something we do not understand? And ultimately, can AI explain itself and get us to trust it?”

Robotic peacekeepers

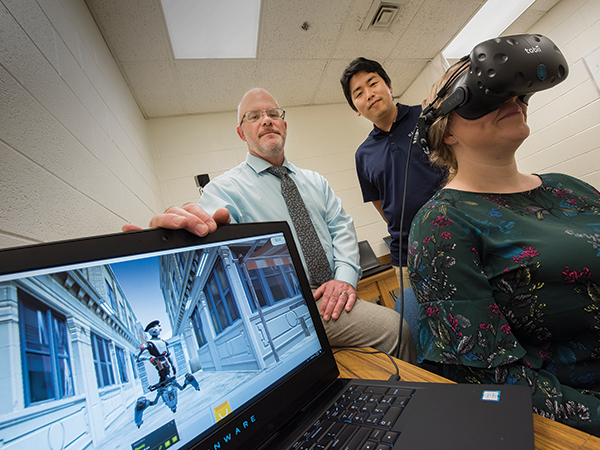

In fact, James Bliss, a psychologist at Old Dominion University, is examining the issue of trust between robots and humans. He is completing work on a project, funded by a grant of nearly $800,000 from the Air Force, that involves participants from a variety of countries.

“The U.S. military is interested in the feasibility of employing robotic peacekeepers. However, little is known about how civilian populations will react to such robots,” Bliss says. “The situation may be further complicated if peacekeeping robots are given the power to enforce their will when situations escalate.”

His project uses computer-generated simulations to gauge how people react when confronted by a security robot. In different scenarios, participants respond to commands given by an unarmed robot, a robot with a nonlethal device (such as a Taser) or one carrying a firearm.

Bliss says countries differ in their approach to arming autonomous robots, which already are being used in a variety of security situations.

For example, robots used for security in private industry in the U.S. typically monitor areas passively, Bliss says. But an airport in China has a security robot armed with a Taser. “It’s really a shifting landscape,” he says.

Bliss adds that, while robots have had a presence in industry for years, they now are more widely used, and they’re getting smarter.

As robots learn beyond their initial programming, he says, important questions will have to be resolved. Like humans, robots may make mistakes.

Another Old Dominion researcher, Khan Iftekharuddin, chair of the university’s Department of Electrical and Computer Engineering, is working with graduate students on technology that uses thought commands to direct robots, for example to help people with disabilities with daily tasks or to enter a danger zone to assist a soldier.

Iftekharuddin says NATO (the North Atlantic Treaty Organization), which has frequently collaborated with ODU researchers, is particularly interested in developing the technology.

Sharing the wheel

At Virginia Tech, researchers have gained national recognition for their research on autonomous vehicles.

Azim Eskandarian, head of the Department of Mechanical Engineering, says the more than 30,000 motor vehicle deaths recorded each year in the U.S. are largely caused by human error.

“If you replace humans [in driving], you’re solving 90 percent of accidents. I believe autonomous driving will have a strong impact on overall safety,” Eskandarian says.

He also believes that the widespread deployment of autonomous vehicles will change transportation dramatically, possibly leading to less traffic congestion.

But he adds that researchers face many technical challenges before autonomous vehicles see widespread use. Even then, he expects humans and machines to share driving responsibilities for a time.

Commercial transportation might be one area of shared driving responsibility, he says. Human drivers might hand some of their time behind the wheel over to the vehicle, reducing fatigue.

Internet’s dark side

Bert Huang, who directs the machine-learning laboratory at Virginia Tech, says he has no idea what artificial intelligence in 2050 may look like, given the unprecedented pace of recent change.

“I am shocked at what has changed in the world in the last three years,” Huang says.

One of the concerns of scientists, Huang says, is that algorithm-driven systems are evolving faster than the scientific community can keep up. Scientists want to incorporate concepts like fairness, accountability and transparency in these systems.

“A few years ago, we thought of the internet as a wonderful tool to connect us. We could get to know everyone,” Huang says.

Instead, he says, scientists are finding that algorithms in social-media systems have the potential — through politically charged content recommended to users — to divide people into opposing camps.

“People have been radicalized, because [the content] is more engaging,” Huang laments.

Robot-assisted surgery

At the University of Virginia, increasing the safety of robot-assisted surgery has become a research focus of Homa Alemzadeh, an assistant professor of electrical and computer engineering in the School of Engineering and Applied Science.

Last year, 877,000 robot-assisted surgeries were performed across the country. But Alemzadeh and her colleagues also identified more than 10,000 adverse incidents in robot-assisted surgery, including 1,391 cases involving patient injury and 144 resulting in death.

Alemzadeh says the role of surgeons and physicians will change significantly as robots become more prominent in medicine and patient treatment.

Although robotic surgical systems will handle many procedures, surgeons and other medical professionals still will be in charge of planning and monitoring treatments as well as programming the machines.

“This will actually raise several challenges and interesting questions in terms of ethical and legal issues, trust in automation, and safety validation and certification of future systems,” Alemzadeh says.

Asimov anticipated those types of questions when “I, Robot” was published more than a half century ago. He left it up to 21st-century researchers to find the answers.